TV Game Shows - FAQ

It's not exactly gambling, but I have always wondered about the best strategy on The Price is Right when spinning the big wheel and you are not last to spin. Assume you can’t control your spin (completely random outcome), the wheel runs in 5-cent increments from $0.05 to $1.00, you get one spin or two spins added together, and you can’t go over $1.00. At what amount should you decline your second spin so that you have the best chance to beat the player who spins after you?

The first player should spin again if his first spin is 65 cents or less.

If any of the following conditions are true the second player should spin again.

- His score is less than the first player’s score.

- His score is 50 cents or less.

- His score is 65 cents or less and he has tied the first player.
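The first player's cutoff can be explored with a small exact calculation. The following is only a sketch under assumptions that are not stated in the answer above: three players, every wheel space equally likely, ties settled by a fair spin-off, and each later player playing to maximize his own chance of winning. All function names are made up for illustration.

```python
from fractions import Fraction
from functools import lru_cache

N = 20               # wheel values in 5-cent units: 1 ($0.05) to 20 ($1.00)
P = Fraction(1, N)   # assumption: every space is equally likely


def total(first, second):
    """Score after two spins; 0 stands for going over $1.00."""
    return first + second if first + second <= N else 0


@lru_cache(maxsize=None)
def last_wins(best, tied):
    """Win chance of the last spinner facing score `best` held by `tied` players."""
    if best == 0:                      # both earlier players went over
        return Fraction(1)
    share = Fraction(1, tied + 1)      # assumption: fair spin-off among tied players
    win = Fraction(0)
    for x in range(1, N + 1):
        if x > best:
            win += P                   # stop and win outright
        elif x == best:
            # take the spin-off, or spin again and win with any non-busting spin
            win += P * max(share, Fraction(N - x, N))
        else:
            for y in range(1, N + 1):  # behind, so a second spin is forced
                t = total(x, y)
                if t > best:
                    win += P * P
                elif t == best:
                    win += P * P * share
    return win


def settle(s1, s2):
    """(player 1, player 2) win chances once both final scores are known."""
    if s1 == 0 and s2 == 0:
        return Fraction(0), Fraction(0)
    if s1 != s2:
        lead = 1 - last_wins(max(s1, s2), 1)
        return (lead, Fraction(0)) if s1 > s2 else (Fraction(0), lead)
    half = (1 - last_wins(s1, 2)) / 2  # tied players split what the last player leaves
    return half, half


@lru_cache(maxsize=None)
def first_wins(s1):
    """Player 1's win chance with final score s1, if player 2 plays for himself."""
    win = Fraction(0)
    for x in range(1, N + 1):
        stop = settle(s1, x)
        spin = [settle(s1, total(x, y)) for y in range(1, N + 1)]
        spin_p1 = sum(a for a, _ in spin) * P
        spin_p2 = sum(b for _, b in spin) * P
        win += P * (spin_p1 if spin_p2 > stop[1] else stop[0])
    return win


def first_should_spin_again(x):
    """True if a second spin gives player 1 a better chance than standing on x."""
    spin = sum(first_wins(total(x, y)) for y in range(1, N + 1)) * P
    return spin > first_wins(x)


cutoff = max(x for x in range(1, N + 1) if first_should_spin_again(x))
print(f"Player 1 spins again at {5 * cutoff} cents or less")
```

If the assumptions match the show, the printed cutoff can be compared with the 65-cent rule given above. Changing how ties are settled would change `last_wins` and `settle`, and possibly the cutoff.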

What is the optimal strategy for the Plinko game on the Price is Right?

From left to right the prizes are $100, $500, $1000, $0, $10000, $0, $1000, $500, $100. I would need to know the exact configuration of pegs on the board to do a perfect analysis, but just eyeballing the board (see link above) I strongly feel the player should drop the puck directly over the $10,000 prize. Although it is bordered by two zeros, all other prizes pale in comparison to the top prize. So the player’s strategy should be to maximize the probability of the top prize by dropping the puck directly above it. To confirm or deny my hypothesis I did a search, and there are lots of links devoted to the study of this game. This (www.amstat.org/publications/jse/v9n3/biesterfeld.html) is one of the better ones, and it agrees with my conclusion. It states in part that the expected value of dropping the puck in the middle is $2557.91, and on either side of the middle is $2265.92, with the value tapering off as you move further from the center.

On the game show Let’s Make a Deal, there are three doors. For the sake of example, let’s say that two doors reveal a goat, and one reveals a new car. The host, Monty Hall, picks two contestants to pick a door. Every time Monty opens a door first that reveals a goat. Let’s say this time it belonged to the first contestant. Although Monty never actually did this, what if Monty offered the other contestant a chance to switch doors at this point, to the other unopened door. Should he switch?

Yes! The key to this problem is that the host is predestined to open a door with a goat. He knows which door has the car, so regardless of which doors the players pick, he can always reveal a goat first. The question is known as the "Monty Hall Paradox." Much of the confusion about it arises because, when the question is framed, it is often not made clear that the host knows where the car is and always reveals a goat first. I put some of the blame on Marilyn Vos Savant, who framed the question badly in her column. Let’s assume that the prize is behind door 1. Following is what would happen if the player (the second contestant) had a strategy of not switching.

- Player picks door 1 --> player wins

- Player picks door 2 --> player loses

- Player picks door 3 --> player loses

Following are what would happen if the player had a strategy of switching.

- Player picks door 1 --> Host reveals goat behind door 2 or 3 --> player switches to other door --> player loses

- Player picks door 2 --> Host reveals goat behind door 3 --> player switches to door 1 --> player wins

- Player picks door 3 --> Host reveals goat behind door 2 --> player switches to door 1 --> player wins

So by not switching the player has 1/3 chance of winning. By switching the player has a 2/3 chance of winning. So the player should definitely switch.
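The same count can be done by brute force. This is a minimal sketch of the standard one-player version, assuming the car and the player's pick are each uniformly random and that the host, who knows where the car is, picks at random when he has two goat doors to choose from. The function name `monty_hall` is hypothetical.

```python
from fractions import Fraction


def monty_hall(switch):
    """Exact win probability when the host always opens a goat door."""
    doors = (1, 2, 3)
    win = Fraction(0)
    for car in doors:
        for pick in doors:
            # The host may open any door that is neither the pick nor the car.
            options = [d for d in doors if d != pick and d != car]
            for opened in options:
                weight = Fraction(1, 9) / len(options)
                final = pick
                if switch:
                    # Move to the one door that is neither picked nor opened.
                    final = next(d for d in doors if d not in (pick, opened))
                if final == car:
                    win += weight
    return win


print("stay:", monty_hall(switch=False))
print("switch:", monty_hall(switch=True))
```

Enumerating every (car, pick, opened) combination avoids any argument about conditional probability: the weights simply add up to 1/3 for staying and 2/3 for switching.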

For further reading on the Monty Hall paradox, I recommend the article at Wikipedia.

My question is about a problem that is known as the "two envelope paradox". You are on a game show. In front of you are 2 envelopes, each containing an unknown amount of cash. You are told that 1 envelope has twice as much money as the other. You are now asked to choose an envelope. You choose one. It contains $50,000. Now you are told that you can keep the envelope you picked, or swap for the other one. Should you swap? Knowing ahead of time that you could swap, then it doesn’t matter, as you would just choose the envelope you ultimately want. But because you only find out about swapping after you choose an envelope, then the original selection and the option to swap are 2 independent events, correct? That said, when deciding to swap or not, the other envelope contains either twice as much or half as much as what you currently have. So it has either $100K or $25k. Since there is a 50% chance of either occurring, the Expected Value of the other envelope is $62,500. Generically speaking, if we let x = the amount you originally selected, then the other envelope's EV is 1.25x. Therefore it is always correct to swap. Is this correct? Thank you.

I'm very familiar with this problem. I address it on my web site of math problems, problem number 6. There I address the general case, including not looking in the first envelope at all. However, to answer your question we cannot ignore the venue where the game is taking place. You said it was a "game show." On most game shows $50,000 is a nice win. Few contestants on the Price is Right ever make it that high. I would guess that fewer than 50% of players on Who Wants to be a Millionaire get that high. Meanwhile, wins of $25,000 are not unusual on game shows. Cars are won routinely on the Price is Right, which have values of about $25,000. The $32,000 level is a common win on Who Wants to be a Millionaire. The average win on Jeopardy per show is roughly $25,000. The great Ken Jennings averaged only $34,091 over his 74 wins. So, my point is that $50,000 is a nice win for a game show, and $100,000 wins are seen much less often than $25,000 wins. Thus, as a game show connoisseur, it is my opinion that the other envelope is more likely to have $25,000 than $100,000. So I say in your example it is better to keep the $50,000. It also goes to show you can never assume the chances that the other envelope has half as much or twice as much are exactly 50/50. Once you see the amount and put it in the context of the venue in which it is being played, you can make an intelligent decision on switching, which throws the 1.25x argument out the window.

My question is regarding the game show, Deal or No Deal, very popular in Australia and about to come to England. The contestant is shown twenty-six numbered briefcases, each containing a hidden amount of money, ranging from 50 cents to $200,000 as below.

- $0.50

- $1

- $2

- $5

- $10

- $25

- $50

- $75

- $100

- $150

- $250

- $500

- $750

- $1,000

- $1,500

- $2,000

- $3,000

- $5,000

- $7,500

- $10,000

- $15,000

- $30,000

- $50,000

- $75,000

- $100,000

- $200,000

The contestant selects one of the briefcases to be THEIR suitcase. Through a process of elimination, by opening the other suitcases, they try to work out how much money is in their case, or whether it would be wiser to take a "Bank Offer". The Bank Offers are based on, but not equivalent to, the arithmetic mean of the remaining briefcases. So, if there are mainly large-valued briefcases remaining, there is a high chance that the contestant’s briefcase is valuable, and so the Bank Offer will be generous. Conversely, if the player has been less fortunate and opened the more valuable briefcases, then the Bank Offer will be low. What would be the best strategy to employ if you were a contestant on this game? A non-mathematical gut instinct strategy would be to ignore the Bank Offers and carry on opening cases until either the $200,000 was opened and eliminated, or both the $100,000 and $75,000 were opened and eliminated. What’s the math behind this game, Wizard?

Deal or No Deal just started here in the U.S. The rules sound the same, except our prizes go up to a million dollars, as follows.

- 0.01

- 1

- 5

- 10

- 25

- 50

- 75

- 100

- 200

- 300

- 400

- 500

- 750

- 1000

- 5000

- 10000

- 25000

- 50000

- 75000

- 100000

- 200000

- 300000

- 400000

- 500000

- 750000

- 1000000

Here is the flow of the game:

- Player picks one case for himself

- Player opens up six of the remaining 25 cases.

- Banker makes an offer.

- If player declines he opens five more of the 19 remaining cases.

- Banker makes an offer.

- If player declines he opens four more of the 14 remaining cases.

- Banker makes an offer.

- If player declines he opens three more of the 10 remaining cases.

- Banker makes an offer.

- If player declines he opens two more of the 7 remaining cases.

- Banker makes an offer.

- If player declines he opens one more of the remaining cases.

- Keep repeating the previous two steps (a banker offer, then one more case opened) until the player accepts an offer or is left with the last unopened case.

The following chart plots the player’s expected value and the banker’s offer.

The most obvious thing to be learned from these three charts is that the first four to six bank offers are terrible deals. The average suitcase has $131,477.54 before any are opened. To accept an offer of only $9,000 to $13,000 in the first stage is a deal only a fool would make. However, the offers gradually get better. Game 2 shows us the expected values were almost the same as the banker offers towards the end of the game, when the player’s expected value was fairly low. However, in games 1 and 3, when the expected values were higher, the banker apparently was trying to take advantage of the risk-averse nature of most people when large amounts are involved. I don’t know if it mattered, but the contestant in game 2 appeared to be a gambler who wanted to win big. Based on comments by the host, who communicates with the banker by phone, the banker does appear to take the contestant’s words and actions into consideration. If I were in the banker’s shoes I would act much the same.

If the player is neither risk averse nor risk prone, and also ignoring tax implications, the player should keep refusing banker offers until one exceeds the average of the remaining suitcases. For most people the progressive nature of the income tax code favors taking a deal. As I have said before I would roughly say the value of money is proportional to the log of the amount. So the more wealth you have going into the game the more inclined you should be to gamble and refuse the banker offers. With such large amounts involved, no strategy will fit everybody. However I can fairly confidently say that the player should refuse the first four to six offers and then take the offers on a case by case basis (pun intended).
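The risk-neutral rule is easy to check in code. This is a small sketch using the U.S. prize list given earlier; the helper names are hypothetical, and it deliberately ignores taxes and risk aversion, just as the rule itself does.

```python
# U.S. case values in dollars, as listed above.
US_CASES = [
    0.01, 1, 5, 10, 25, 50, 75, 100, 200, 300, 400, 500, 750,
    1000, 5000, 10000, 25000, 50000, 75000, 100000, 200000,
    300000, 400000, 500000, 750000, 1000000,
]


def average_remaining(remaining):
    """Expected value of the player's case, given the amounts still unopened."""
    return sum(remaining) / len(remaining)


def risk_neutral_accepts(offer, remaining):
    """A risk-neutral player takes the deal only if it beats the average."""
    return offer > average_remaining(remaining)


print(round(average_remaining(US_CASES), 2))
print(risk_neutral_accepts(13000, US_CASES))
```

With all 26 cases in play the average comes to $131,477.54, so an opening offer in the $9,000 to $13,000 range is rejected by this rule.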

Links:

You can watch Deal or No Deal at NBC.com.

Archive of past shows.

Watching "Deal or no Deal". I realize the "offer" from the banker is just the remaining values of the cases divided by the number of cases [give or take rounding]. Is there ANY strategy to this game at all, or is "the deal" always just an OK thing to take? Does it depend on how many cases you have to open or anything?

As my December 26, 2005 column shows, the banker offer is usually much less than the average of the remaining cases. However, hypothetically, if the offer always equaled that average, then every strategy would have the same expected value. The player would be indifferent at every offer.

At the start of "Deal or No Deal" the odds of picking the $1,000,000 case are 1 in 26. After eliminating all the cases except one, what are the odds that my case contains the million dollars? Is it 50-50 or still 1 in 26?

50-50

In your April 5, 2006 column you state that if there are only two cases left in Deal or no Deal and the million dollars is still in play then the probability my case has the million is 50-50. I disagree. Isn’t this just a variation of the Monty Hall problem? That is, the million is more likely to be on the stage than in his case?

No. I’m getting lots of people arguing with me about this one. Many writers claim that probabilities can not change if additional information is introduced. So if the probability starts at 1 in 26 then it must stay there. Contrary to what betting system salesmen say, probabilities indeed can change as additional information is introduced. I don’t want to try to teach basic probability here but any college level math book on conditional probability or Bayes’ Theorem should cover this topic nicely.

Let me explain what happened on Let’s Make a Deal. The contestant would choose one of three curtains. One would contain a very valuable prize and the other two smaller prizes. For the sake of argument let’s say behind one curtain was a car and behind each of the other two a goat. Then Monty would always, I repeat ALWAYS, open up one of the two unchosen curtains to reveal a goat. After hundreds of shows this would imply that Monty Hall (the host) knew where the car was and deliberately opened a curtain that revealed a goat. Obviously, when the player chose his curtain the probability it held the car was 1/3 and the probability one of the two unchosen curtains held the car was 2/3. Monty is then predestined to open an unchosen curtain containing a goat. Predestined is the key word here. Because Monty cannot open the player’s curtain at this stage, the probability the player’s curtain reveals the car stays at 1/3. The probability an unchosen curtain reveals the car remains at 2/3; however, it is now all on one curtain. So after a goat is revealed the probability the player’s curtain has the car is 1/3 and the probability the other unopened curtain has the car is 2/3, making switching a wise choice.

The following table shows all the possible outcomes. In the case where the player chose the curtain with the car I had Monty opening a curtain arbitrarily. You can see that not switching results in a 1/3 probability of winning, and switching results in a 2/3 probability of winning.

Let’s Make a Deal

| Player Chooses | Car | Curtain Opened | Probability | Win by Switching |

| 1 | 1 | 1 | 0% | n/a |

| 1 | 1 | 2 | 5.56% | N |

| 1 | 1 | 3 | 5.56% | N |

| 1 | 2 | 1 | 0% | n/a |

| 1 | 2 | 2 | 0% | n/a |

| 1 | 2 | 3 | 11.11% | Y |

| 1 | 3 | 1 | 0% | n/a |

| 1 | 3 | 2 | 11.11% | Y |

| 1 | 3 | 3 | 0% | n/a |

| 2 | 1 | 1 | 0% | n/a |

| 2 | 1 | 2 | 0% | n/a |

| 2 | 1 | 3 | 11.11% | Y |

| 2 | 2 | 1 | 5.56% | N |

| 2 | 2 | 2 | 0% | n/a |

| 2 | 2 | 3 | 5.56% | N |

| 2 | 3 | 1 | 11.11% | Y |

| 2 | 3 | 2 | 0% | n/a |

| 2 | 3 | 3 | 0% | n/a |

| 3 | 1 | 1 | 0% | n/a |

| 3 | 1 | 2 | 11.11% | Y |

| 3 | 1 | 3 | 0% | n/a |

| 3 | 2 | 1 | 11.11% | Y |

| 3 | 2 | 2 | 0% | n/a |

| 3 | 2 | 3 | 0% | n/a |

| 3 | 3 | 1 | 5.56% | N |

| 3 | 3 | 2 | 5.56% | N |

| 3 | 3 | 3 | 0% | n/a |

Meanwhile in Deal or No Deal nothing is predestined. Let’s assume on Deal or No Deal the amounts remaining were $0.01, $1, and $1,000,000. With three cases left it IS possible that the opened case will contain the million dollars. The following table shows the possible outcomes with three cases left. Remember, the player can not open his own case.

Deal or No Deal

| Player Chooses | Million $ | Case Opened | Probability | Win by Switching |

| 1 | 1 | 1 | 0% | n/a |

| 1 | 1 | 2 | 5.56% | N |

| 1 | 1 | 3 | 5.56% | N |

| 1 | 2 | 1 | 0% | n/a |

| 1 | 2 | 2 | 5.56% | Hopeless |

| 1 | 2 | 3 | 5.56% | Y |

| 1 | 3 | 1 | 0% | n/a |

| 1 | 3 | 2 | 5.56% | Y |

| 1 | 3 | 3 | 5.56% | Hopeless |

| 2 | 1 | 1 | 5.56% | Hopeless |

| 2 | 1 | 2 | 0% | n/a |

| 2 | 1 | 3 | 5.56% | Y |

| 2 | 2 | 1 | 5.56% | N |

| 2 | 2 | 2 | 0% | n/a |

| 2 | 2 | 3 | 5.56% | N |

| 2 | 3 | 1 | 5.56% | Y |

| 2 | 3 | 2 | 0% | n/a |

| 2 | 3 | 3 | 5.56% | Hopeless |

| 3 | 1 | 1 | 5.56% | Hopeless |

| 3 | 1 | 2 | 5.56% | Y |

| 3 | 1 | 3 | 0% | n/a |

| 3 | 2 | 1 | 5.56% | Y |

| 3 | 2 | 2 | 5.56% | Hopeless |

| 3 | 2 | 3 | 0% | n/a |

| 3 | 3 | 1 | 5.56% | N |

| 3 | 3 | 2 | 5.56% | N |

| 3 | 3 | 3 | 0% | n/a |

What the Deal or No Deal table shows is that with three cases left the probability the player opens the million dollar case is 1/3 (hopeless to win), the probability a switching player will win is 1/3, and the probability a switching player will lose is 1/3. Thus the odds are the same to switch cases. Once there are only two cases left the probability each case contains the larger prize is 50/50.
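The three-case table can be reproduced by enumeration. This is a sketch assuming the player's case and the million-dollar case are each uniformly random, and that the case opened is picked at random from the two the player does not hold, with no knowledge of the contents. The function name is hypothetical.

```python
from collections import defaultdict
from fractions import Fraction


def three_case_outcomes():
    """Probability of each result when one of two unheld cases is opened blindly."""
    outcomes = defaultdict(Fraction)
    cases = (1, 2, 3)
    for mine in cases:
        for million in cases:
            for opened in cases:
                if opened == mine:
                    continue  # the player cannot open his own case
                weight = Fraction(1, 3) * Fraction(1, 3) * Fraction(1, 2)
                if opened == million:
                    outcomes["hopeless"] += weight
                elif mine == million:
                    outcomes["keep wins"] += weight
                else:
                    outcomes["switch wins"] += weight
    return outcomes


result = three_case_outcomes()
still_alive = result["keep wins"] + result["switch wins"]
print(dict(result))
print("keep wins, given the million survives:", result["keep wins"] / still_alive)
```

The difference from the Let’s Make a Deal enumeration is the "hopeless" branch: because the million can be opened by accident, the surviving cases share the remaining probability equally.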

Time for another Deal or No Deal question. Let’s say after all the deals from the banker and guest appearances by Celine Dion, you’re left with two suitcases, the $500,000 and the $1,000,000. The banker’s offer will be slightly less than $750,000 I assume. Which would you choose? What if the two briefcase left were the $.01 and $1,000,000 one? I guess it’s all a matter if you’re a gambler or not, and nothing really to do with odds. The reason why I’m asking is I wonder if ANYBODY will ever win $1,000,000 (even if they’ve picked the magic briefcase).

When the prizes become life-changing amounts, the wise player should play conservatively at the expense of maximizing expected value. A good strategy should be to maximize expected happiness. A good function to measure happiness, I think, is the log of your total wealth. Let’s take a person with existing wealth of $100,000 who is presented with two cases of $0.01 and $1,000,000. By taking “no deal” the expected happiness is 0.5*log($100,000.01) + 0.5*log($1,100,000) = 5.520696. Let b be the total wealth, existing wealth plus bank offer, at which the player is indifferent to taking the deal.

log(b) = 5.520696

b = 10^5.520696

b = $331,662.50

Subtracting the $100,000 of existing wealth, this hypothetical player should be indifferent at a bank offer of $231,662.50. The lesser your wealth going into the game the more conservatively you should play. Usually in the late stages of the game the bank offers are close to expected value, sometimes a little bit more. The only rational case where a player could win the million is if he had a lot of wealth going into the game and/or the bank offers were unusually stingy. The producers seem to like hard-working middle class people, so we’re unlikely to see somebody who can afford to be cavalier when large amounts are involved. I have also never seen the bank make offers under 90% of expected value late in the game. The time when we will see somebody win the million is when a degenerate gambler gets on the show who can’t stop. When that happens I will be rooting for the banker.
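The indifference point can be recomputed for any starting wealth. This is a sketch assuming happiness is the base-10 log of total wealth (existing wealth plus winnings) and that the remaining cases are equally likely. The function name is hypothetical. It reports both the total wealth at which the player is indifferent and the cash offer that gets him there.

```python
import math


def indifference_wealth(existing, prizes):
    """Total wealth whose log equals the expected log-wealth of saying no deal."""
    expected_log = sum(math.log10(existing + p) for p in prizes) / len(prizes)
    return 10 ** expected_log


existing = 100_000
wealth = indifference_wealth(existing, [0.01, 1_000_000])
offer = wealth - existing  # cash needed on top of what the player already has

print(f"indifferent at total wealth of ${wealth:,.2f}")
print(f"which corresponds to an offer of ${offer:,.2f}")
```

Trying larger values of `existing` shows the indifference offer creeping up toward the $500,000 average of the two cases, which is the sense in which a wealthier player can afford to gamble.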

This is a follow up on Deal or No Deal, which I watched for the first time recently. Your analysis assumes that the house doesn’t know the value of the money in the suitcase. However, in the show I watched, in the endgame both contestants had selected a valuable case, and both were offered (or would have been offered, as one had already quit) above expected value (EV) deals. In the most extreme case, a player "would have been" offered $687K when the two dollar amounts left were $500K and $750K. The only rational explanation for this is that the banker knows the value of the player’s suitcase and the deals offered are based on that.

Just my two cents, and no reply is necessary.

Thanks for not expecting a reply, but I usually do reply to game show questions. They claim in every episode that the amounts in the cases are randomly placed, and that neither Howie, nor the banker, know the results. This was never claimed on Let’s Make a Deal, where Monty Hall obviously did know. I too have seen the banker offer more than expected value as the last offer, especially when large amounts are involved. In my strong opinion, this is not because the banker knows what is inside the player’s case. In the 1950s there was a huge scandal when it became known that the show 21, as well as others, were fixed. There is no compelling reason to ruin a successful show, and the integrity of all game shows, to skim some prize money via the bank offers.

I can offer three theories why the banker sometimes offers more than the average of the remaining cases.

- The show tries to portray the banker as sweating the money in his office. Howie Mandel is often commenting on the banker’s mood and tone of voice. Maybe it makes the show more dramatic to think of the banker as a risk-averse bean counter, preferring to cut his losses, than risk giving out a big prize.

- The real banker truly is risk-averse. This is getting out of my area of expertise, but from my understanding, game and reality shows are usually produced by a company independent from the television network. These smaller companies will seek out an insurance company to mitigate the risk of contestants winning the larger prizes. In such a case, the insurance company would be the real banker, and may be influencing the behavior of the banker on the show. The insurance companies that insure odd-ball stuff like this are not gigantic, and may prefer playing it safe when large amounts are involved.

In your example, the banker offer was 9.92% above expected value. If the banker were following the Kelly Criterion, such an offer would have been made with a total bankroll of only $782,008, which is less than the maximum prize. No self-respecting insurance company would be that conservative. Clearly, this reason alone cannot justify the offer in your example.

- The show is trying to make the contestants look stupid and greedy. Shows like Are You Smarter than a Fifth Grader and the Tonight Show's “Jaywalking” would not be successful if we didn’t find some satisfaction in laughing at the trivia-challenged. The shows Friend or Foe and The Weakest Link were outstanding at exposing greed in human nature. I must confess a sense of schadenfreude when a contestant refuses an above expected value offer, and walks with the lower amount in his case.

I tend to think the reason is a combination of these three reasons, but mainly the third.
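The Kelly bankroll quoted under the second theory can be reproduced with a little algebra. This is a sketch assuming the banker has log utility, the two remaining amounts are equally likely to be in the player's case, and the offer was exactly $687,000 (the question only says "$687K"). A banker with bankroll W is indifferent when log(W − offer) equals the average of log(W − low) and log(W − high), which reduces to W = (offer² − low×high) / (2×offer − low − high). The function name is hypothetical.

```python
def kelly_bankroll(offer, low, high):
    """Bankroll at which a log-utility banker is indifferent to paying `offer`."""
    # From (W - offer)^2 = (W - low) * (W - high), solved for W.
    return (offer ** 2 - low * high) / (2 * offer - low - high)


offer, low, high = 687_000, 500_000, 750_000
premium = offer / ((low + high) / 2) - 1

print(f"offer is {premium:.2%} above expected value")
print(f"indifference bankroll: ${kelly_bankroll(offer, low, high):,.0f}")
```

A banker with a larger bankroll than this would, under the same assumptions, prefer to make a smaller offer.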

If I ended this answer here, I’m sure I would get comments, questioning whether the hypothetical banker offers would have really been made. The implication being that they are puffed up for dramatic effect. I have recorded the specifics of 13 games. In one of them, with three cases left ($1,000; $5,000; and $50,000), the average was $18,667, and the offer was $21,000. That is 12.5% over the expected value. In another show, with two cases left ($400 and $750,000), the average was $375,200, and the offer was $400,000. That is 6.6% above expected value. So, I see no reason to question the integrity of the hypothetical offers.

Links:

Deal or no deal formula: This page shows old, and new, formulas for calculating the banker offer, based on the free game at the Deal or No Deal web site.

How much would you bet, in each person’s shoes, in Final Jeopardy, with these scores:

Player A: $10,000

Player B: $8,000

Player C: $3,500

Let me start by making some assumptions. First, I’m going to assume that the three players have no prior knowledge of betting behavior in Final Jeopardy, except the probabilities of being correct in the table presented. Second, I’m going to assume that knowing the category is of no help. Third, I’m also going to assume that all three contestants want to go for the win, not wishing to take another player along in a tie.

Let’s start with player C. He should anticipate that A might bet $6001, to stay above B if B is right. However, if A is wrong, that would lower him to $3999. C would need to bet at least $500, and be right, to beat A in such a scenario. However, in my opinion, if you must be right to win, you may as well bet big. So if I were C I would bet everything.

B is torn between betting big or small. A small bet should be $999 or less, to stay above C if C is correct. The benefit of a small bet is staying above C no matter what, hoping that A will go big, and be wrong. A big bet does not necessarily have to go the whole way, but it may as well. The benefit of a big bet is hoping that either A goes small, or goes big and is wrong, but both require B to be right.

A basically wants to go the same way as B. A small bet for A can be anything from $0 to $1000, which will stay above B if B bets $999. A big bet should be $6001, to guarantee a win if A is right, and still retain hope if B goes big, and all three players are wrong.

To help with the probabilities of the eight possible outcomes of right and wrong answers, I looked at the Final Jeopardy results for seasons 20 to 24, from j-archive.com (no longer available). Here is what the results look like, where player A is the leader, followed by player B, and C in last.

Possible Outcomes in Final Jeopardy

| Player A | Player B | Player C | Probability |

| Right | Right | Right | 21.09% |

| Right | Right | Wrong | 9.73% |

| Right | Wrong | Right | 10.27% |

| Wrong | Right | Right | 8.74% |

| Right | Wrong | Wrong | 13.33% |

| Wrong | Right | Wrong | 10.27% |

| Wrong | Wrong | Right | 8.63% |

| Wrong | Wrong | Wrong | 17.92% |

Using the kind of game theory logic I explain in problem 192 at my site mathproblems.info, I find that A and B should randomize their strategy as follows.

Player A should bet big with probability 73.6% and small with probability 26.4%.

Player B should bet big with probability 67.3% and small with probability 32.7%.

Player C should bet big with probability 100.0%.

If this strategy is followed, the probability of each player’s winning will be as follows:

Player A: 66.48%

Player B: 27.27%

Player C: 6.25%

As an aside, based on the table above, the probability of the leader getting Final Jeopardy correct is 54.4%, for the second-place player, 49.8%, and 48.7% for the third-place player. The overall probability is 51.0%.
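These per-player figures follow directly from the outcome table. A short sketch that sums the rows, using the rounded percentages exactly as printed above (so the totals may differ slightly from unrounded data); the names are hypothetical.

```python
# (A right?, B right?, C right?) -> probability in percent, from the table above.
OUTCOMES = {
    (True, True, True): 21.09,
    (True, True, False): 9.73,
    (True, False, True): 10.27,
    (False, True, True): 8.74,
    (True, False, False): 13.33,
    (False, True, False): 10.27,
    (False, False, True): 8.63,
    (False, False, False): 17.92,
}


def chance_right(player):
    """Percent of games in which the given player (0=A, 1=B, 2=C) was right."""
    return sum(p for outcome, p in OUTCOMES.items() if outcome[player])


rates = [chance_right(i) for i in range(3)]
overall = sum(rates) / 3

for name, rate in zip("ABC", rates):
    print(f"Player {name}: {rate:.1f}%")
print(f"Overall: {overall:.1f}%")
```

The table also shows the answers are not independent: all three right (21.09%) and all three wrong (17.92%) are both more common than multiplying the individual rates would suggest.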

As a practical note, players do have knowledge of betting behavior. In my judgment, players tend to bet big more often than mathematically justified. Interestingly, I find wagering on Daily Doubles to be more conservative than mathematically justified. One of the reasons I believe Ken Jennings did so well was aggressive wagering on the Daily Doubles. Anyway, in reality if I were actually on the show, I would assume the other two players would bet aggressively. So my actual wagers would be $6000 as A (being nice to B), $0 as B, and $3495 as C (leaving a little un-bet, in case A foolishly bets everything or all but $1, and is wrong).

Before somebody challenges me about how one could draw a random number in the actual venue, let me suggest the Stanford Wong strategy of using the second hand of your watch to draw a random number from 1 to 60.

A new game show has premiered in the UK, called the "Colour of Money." A lone contestant is randomly given a target amount, which has been known to range from £55,000 to £79,000. To earn money, he picks 10 of 20 bank machines, each containing £1,000 to £20,000, in even increments of £1,000. When he picks a machine, it will begin counting upwards from £1,000, in £1,000 increments.

The player may yell "Stop!" at any time, and he will bank the amount showing on screen. If the player does not stop in time, and the machine runs out of money, then he banks nothing. A hostess provides statistics, such as number of machines left to pick, amount left to earn, average amount needed per machine to win, and what amounts remain in the machines.

A player can "play the gaps," in that if a run of machines have been picked, say, £4k, £5k, and £6k, a machine would be guaranteed to make it to £7,000 once it passes the £3,000 mark. My question is, what kind of strategy should a player use?

This is the kind of thing I could spend weeks analyzing. Unfortunately, I read your message almost three months after you wrote, due to a large backlog of “ask the Wizard” questions. The Wikipedia page seems to indicate that that show was a flop, and was canceled. However, it still makes for an interesting problem.

The hostess conveniently tells you the average amount you need per remaining machine to reach your goal. After hours of scribbling, I can’t come up with anything better than setting a stopping goal of about 25% higher than the required average. That is just an educated guess, so please don’t ask me to prove it is optimal. As you noted, also ride the gaps, never stopping just before an amount that was already picked.

When there are only two machines left, if the total amount needed is £13,000 or less, I would try to get it all in the second-to-last machine. If £14,000 or more, I would try to get half of it at the next machine.

If they should bring back this show, I hope my UK readers will let me know. This is the kind of puzzle that I could become obsessed with, like the Eternity puzzle, which was coincidentally (or not) also out of the UK.

P.S. Why do you spell "colour" with a u in the UK? It makes no sense to me.

What is the average prize per punch and optimal strategy for the Punch a Bunch game on The Price is Right?

For those not familiar with the rules, they are explained at the Price Is Right web site. Please take a moment to go there if you’re not familiar with the game, because I’m going to assume you know the rules. There are several YouTube videos of the game as well. Here is an old one, which shows a second chance, but the maximum prize at the time was $10,000 only. It is now $25,000.

First, let’s calculate the expected value of a prize that is not paired with a second chance. The following table shows that average is $1371.74.

Punch a Bunch Prize Distribution with no Second Chance

| Prize | Number | Probability | Expected Win |

| 25000 | 1 | 0.021739 | 543.478261 |

| 10000 | 1 | 0.021739 | 217.391304 |

| 5000 | 3 | 0.065217 | 326.086957 |

| 1000 | 5 | 0.108696 | 108.695652 |

| 500 | 9 | 0.195652 | 97.826087 |

| 250 | 9 | 0.195652 | 48.913043 |

| 100 | 9 | 0.195652 | 19.565217 |

| 50 | 9 | 0.195652 | 9.782609 |

| Total | 46 | 1.000000 | 1371.739130 |

Second, calculate the average prize that does have a second chance. The following table shows that average is $225.

Punch a Bunch Prize Distribution with Second Chance

| Prize | Number | Probability | Expected Win |

| 500 | 1 | 0.250000 | 125.000000 |

| 250 | 1 | 0.250000 | 62.500000 |

| 100 | 1 | 0.250000 | 25.000000 |

| 50 | 1 | 0.250000 | 12.500000 |

| Total | 4 | 1.000000 | 225.000000 |

Third, create an expected value table based on the number of second chances the player finds. This can be found using simple math. For example, the probability of 2 second chances is (4/50)×(3/49)×(46/48). The expected win given s second chances is $1371.74 + s×$225. The following table shows the probability and average win for 0 to 4 second chances.

Punch a Bunch Prize Return Table

| Second Chances | Probability | Average Win | Expected Win |

| 4 | 0.000004 | 2271.739130 | 0.009864 |

| 3 | 0.000200 | 2046.739130 | 0.408815 |

| 2 | 0.004694 | 1821.739130 | 8.551020 |

| 1 | 0.075102 | 1596.739130 | 119.918367 |

| 0 | 0.920000 | 1371.739130 | 1262.000000 |

| Total | 1.000000 | | 1390.888067 |

So the average win per punch (including additional money from second chances) is $1390.89.
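The three tables can be rebuilt in a few lines. This is a sketch following the same model as above: 50 holes, 46 slips with no second chance, 4 small-prize slips that carry a second chance, and an expected win of the regular average plus $225 per second chance found. The names are hypothetical.

```python
from fractions import Fraction

# prize -> number of slips without a second chance (46 in all)
REGULAR = {25000: 1, 10000: 1, 5000: 3, 1000: 5, 500: 9, 250: 9, 100: 9, 50: 9}
# prizes on the four slips that also carry a second chance
SECOND_CHANCE = [500, 250, 100, 50]

regular_mean = Fraction(sum(p * n for p, n in REGULAR.items()), sum(REGULAR.values()))
second_mean = Fraction(sum(SECOND_CHANCE), len(SECOND_CHANCE))


def prob_second_chances(s):
    """Chance of punching exactly s second-chance slips, then a regular slip."""
    prob, holes, second_left = Fraction(1), 50, 4
    for _ in range(s):
        prob *= Fraction(second_left, holes)
        second_left -= 1
        holes -= 1
    return prob * Fraction(46, holes)


def expected_per_punch():
    """Average win per punch, including money picked up on second chances."""
    return sum(
        prob_second_chances(s) * (regular_mean + s * second_mean)
        for s in range(5)
    )


print(f"{float(regular_mean):.2f} {float(second_mean):.2f}")
print(f"{float(expected_per_punch()):.2f}")
```

Using `Fraction` keeps the arithmetic exact until the final conversion, so the probabilities for 0 to 4 second chances sum to exactly 1.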

The following table shows my strategy of the minimum win to accept, according to the number of punches remaining. Note the player can get to $1,400 with prizes of $1,000 + $250 + $100 + $50 via three second chances.

Punch a Bunch Strategy

| Punches Remaining | Minimum to Stand |

| 3 | $5,000 |

| 2 | $5,000 |

| 1 | $1,400 |

This question was raised and discussed in the forum of my companion site Wizard of Vegas.

What would be the optimal strategy for dividing your money on the game show Million Dollar Money Drop, if you were not sure of the answer?

For the benefit of other readers, let me review the rules first.

- A team of players starts with $1,000,000.

- The team is given a multiple choice question.

- The team is to divide its money among the possible answers. Whatever money is put on the correct answer will move on to the next question.

- The team must completely rule out at least one possible answer by not putting any money on it.

- This process repeats for several rounds. The player is also given one chance to change his mind.

Obviously, if the team is sure of the answer then he should put all his money on the correct answer. If the team can narrow down the answer to two, but assigns each a 50% chance of being correct, then they should divide his money equally between the two choices.

Where it gets more difficult is if the team leans towards one answer but doesn’t completely rule out one or more of the others. Let’s look at an example. Suppose the team determines the probability of each correct answer as follows: A 10%, B 20%, C 30%, D 40%. How should the team divide up its money?

I claim the answer is to follow the Kelly Criterion. Briefly, the team should maximize the expected log of its wealth with every question. To do this, you have to consider how much wealth you already have.

Let’s say your existing wealth, which you have accumulated independently of the show, is $100,000. It is your first question, so you have $1,000,000 of game show money to split up. First eliminate the option with the lowest probability, to conform with the show rules. Then you want to maximize 0.2×log(100,000+b×1,000,000) + 0.3×log(100,000+c×1,000,000) + 0.4×log(100,000+d×1,000,000), where lower-case b, c, and d refer to the portion placed on each answer and b + c + d = 1.

This could be solved with calculus and solving a trinomial equation, trial and error, or my preference, the "goal seek" feature in Excel. Whatever you use, the right answer is to put 18.9% on B, 33.3% on C, and 47.8% on D.
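
For readers who prefer code to a spreadsheet, here is a minimal Python sketch of the same maximization. It relies on an assumption worth stating: when every answer kept gets a positive bet, setting the derivatives equal under the constraint that the fractions sum to 1 makes final wealth on each answer proportional to its probability, which gives a closed form. The function name `kelly_split` and its parameters are hypothetical.

```python
def kelly_split(probs, wealth, stake):
    """Fractions of `stake` to place on each answer to maximize expected log wealth.

    Maximizing sum(p_i * log(wealth + f_i * stake)) subject to sum(f_i) = 1
    makes wealth + f_i * stake proportional to p_i.
    Assumes every resulting fraction is non-negative.
    """
    scale = (stake + len(probs) * wealth) / sum(probs)
    fractions = [(p * scale - wealth) / stake for p in probs]
    if min(fractions) < 0:
        raise ValueError("closed form gives a negative bet; drop that answer and retry")
    return fractions


# Example from the text: answer A is ruled out, leaving B, C, and D.
split = kelly_split([0.2, 0.3, 0.4], wealth=100_000, stake=1_000_000)
print([f"{f:.1%}" for f in split])  # ['18.9%', '33.3%', '47.8%']
```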

Of course, nobody on the show is going to be able to do all this math in the time allowed, not to mention that you also have to move a lot of bundles of cash as well in that time. My more practical advice is to just divide up the money in proportion to your assessment of the probability of the answer’s being correct, assuming the least likely choice is not a possibility. In the example, that would cause a split of 22.2% on B, 33.3% on C, and 44.4% on D.

This question was raised and discussed in the forum of my companion site Wizard of Vegas.

The Michigan Lottery has a three-player game with the following rules: Is there any positional advantage to going last in this game? What is the optimal strategy for each player? Here is a YouTube video showing the game.

First, there is no positional advantage to acting last. Since the players are kept in a sound-proof booth while any previous players play, order doesn't matter.

Second, there must be a Nash Equilibrium to the game where a strategy to stand with a score of at least x points will be superior to any other strategy. The question is finding x.

I first asked myself what the strategy would be if, instead of a card numbered 1 to 100, each player got a random number uniformly distributed between 0 and 1. I then looked for the point x where a perfect logician would be indifferent between standing and switching. With that answer, it is easy to adapt the result to a discrete distribution from 1 to 100.

I'll stop talking at this point and let my readers enjoy the problem. See the links below for the answer and solution.

Answer for a continuous distribution from 0 to 1.

Answer for a discrete distribution from 1 to 100.

For my solution, please click here (PDF).

This question was raised and discussed in my forum at Wizard of Vegas.

Assuming no knowledge of the price of anything, what is the optimal strategy for the Race Game on The Price is Right?

For those unfamiliar with the rules, the player is given four price tags and must place them on four items. When he is done he pulls a lever which gives the number of correct matches. If the player has fewer than four correct, then he may rearrange the tags and try again. The player may try as many times as he can within 45 seconds.

My advice is to always submit a selection that has a chance of winning, given the previous history of selections and scores. If the first score is 0, then don't reverse two sets of two tags, but instead move everything by one position in either direction.

If you're not able to do the logic on the spot, then I spell it out for you below. To use this strategy, assign the different tags the letters A, B, C, and D. Then place them in the order shown, from left to right on the stage. Always start with ABCD. Then look up the score history below and choose the sequence of tags indicated for that score sequence.

If 0, then BCDA

If 0-0, then CDAB

If 0-0-0, then DABC (must win)

If 0-1, then BDAC

If 0-1-0, then CADB (must win)

If 0-1-1, then CDBA

If 0-1-1-0, then DCAB (must win)

If 0-2, then BADC

If 0-2-0, then DCBA (must win)

If 1, then ACDB

If 1-0, then BDCA

If 1-0-0, then CABD

If 1-0-0-0, then DBAC (must win)

If 1-1, then BDCA

If 1-1-0, then CABD

If 1-1-0-1, then CBAC (must win)

If 1-1-1, then BCAD (must win)

If 2, then ABDC

If 2-0, then BACD (must win)

If 2-1, then ACBD

If 2-1-0, then DBCA (must win)

If 2-1-1, then ADCB

If 2-1-1-0, then CBAD (must win)
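
The advice to submit only arrangements that can still win can also be worked out mechanically. The following Python sketch lists every arrangement consistent with a history of guesses and scores. The helper names `score` and `still_possible` are hypothetical, and the sketch assumes the lever reports only the number of correct tags, as described above.

```python
from itertools import permutations


def score(guess, truth):
    """Number of tags placed on the correct item."""
    return sum(g == t for g, t in zip(guess, truth))


def still_possible(history):
    """All arrangements consistent with every (guess, score) pair seen so far."""
    return sorted(
        "".join(p)
        for p in permutations("ABCD")
        if all(score(guess, p) == s for guess, s in history)
    )


# After ABCD and BCDA both score 0, two arrangements remain.
print(still_possible([("ABCD", 0), ("BCDA", 0)]))               # ['CDAB', 'DABC']
# After CDAB also scores 0, only one is left, so the next try must win.
print(still_possible([("ABCD", 0), ("BCDA", 0), ("CDAB", 0)]))  # ['DABC']
```

Any arrangement returned by `still_possible` is a legal next guess under this advice; when the list has one entry, that entry is a "must win."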

The following table shows the probability of each number of total turns. The bottom right cell shows an expected number of turns of 10/3.

Race Game

| Turns | Combinations | Probability | Return |

|---|---|---|---|

| 1 | 1 | 0.041667 | 0.041667 |

| 2 | 4 | 0.166667 | 0.333333 |

| 3 | 8 | 0.333333 | 1.000000 |

| 4 | 8 | 0.333333 | 1.333333 |

| 5 | 3 | 0.125000 | 0.625000 |

| Total | 24 | 1.000000 | 3.333333 |

This question is discussed on my forum at Wizard of Vegas.

What is the probability any given player wins $25,000 in the Showcase Showdown on the Price is Right?

As a word of explanation to other readers, let me explain what you're talking about. The Showcase Showdown is a game played on the game show The Price is Right. In the Showcase Showdown, each player takes his turn spinning a wheel which has an equal probability of stopping on every amount evenly divisible by $0.05 from $0.05 to $1.00. If the player does not like his first spin he may spin again, adding the second spin to the first; however, if he goes over $1.00 he is immediately disqualified. In the event of a tie, each tied player gets one spin in a tie-breaker round, and the highest spin wins. In the event of another tie, this process repeats until the tie is broken.

The main purpose of the Showcase Showdown is to advance to the Showcase. However, there are also immediate cash prizes too, as follows:

- In the first round, if any player gets a total of $1.00, whether in one spin or the sum of two spins, he shall win $1,000.

- In the first, and only first, tie-breaker round, if the wheel lands on $0.05 or $0.15, then the player shall win $10,000.

- In the first, and only first, tie-breaker round, if the wheel lands on $1.00, then the player shall win $25,000.

I explain the optimal strategy to the Showcase Showdown in column #101. Assuming that strategy is followed, the following table answers your question and various others.

Showcase Showdown Statistics

| Question | Answer |

|---|---|

| Expected $1000 winners first round | 0.253790 |

| Probability 2-player tie | 0.113854 |

| Probability 3-player tie | 0.004787 |

| Expected $10000 winners second round | 0.024207 |

| Expected $25000 winners second round | 0.012104 |

| Expected total prize money | $798.45 |

| Probability any given player wins $1000 | 0.084597 |

| Probability any given player wins $10000 | 0.008069 |

| Probability any given player wins $25000 | 0.004035 |

The bottom row of the table shows that if you make the Showcase Showdown, without considering your order to spin, your chance of winning $25,000 is 0.004035, or 1 in 248.
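
The lower rows of the table follow from the first three by simple arithmetic. Here is a minimal Python sketch of that step. It assumes, per the rules above, that each tied player gets exactly one spin in the first tie-breaker round and that all 20 stops on the wheel are equally likely. The variable names are illustrative.

```python
STOPS = 20  # wheel spaces: $0.05 to $1.00 in 5-cent steps

# Values taken from the table above.
expected_1000_winners = 0.253790
p_two_way_tie = 0.113854
p_three_way_tie = 0.004787

# Each tied player gets one spin in the first tie-breaker round.
expected_tiebreak_spins = 2 * p_two_way_tie + 3 * p_three_way_tie
expected_25000_winners = expected_tiebreak_spins * 1 / STOPS  # lands on $1.00
expected_10000_winners = expected_tiebreak_spins * 2 / STOPS  # lands on $0.05 or $0.15

expected_prize_money = (
    1_000 * expected_1000_winners
    + 10_000 * expected_10000_winners
    + 25_000 * expected_25000_winners
)
p_player_wins_25000 = expected_25000_winners / 3  # three players, order ignored

print(f"Expected total prize money: ${expected_prize_money:.2f}")
print(f"Chance a given player wins $25,000: 1 in {1 / p_player_wins_25000:.0f}")
```

Small differences in the last decimal place against the table come from using the rounded inputs.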

This question is asked and discussed in my forum at Wizard of Vegas.

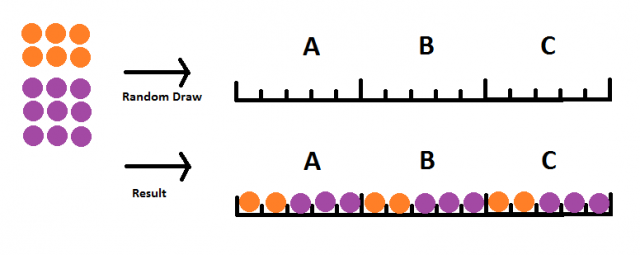

On the game show Survivor there were two teams, one with nine players and other six. They then got randomly placed into three new teams of five people each. Each new team was composed of three members of the former nine-player team and two from the former six-player team. What are the odds of that?

Let's call the former team of nine players team 1 and the one of six players team 2. The number of ways you could pick three players from team 1 and two from team 2 is combin(9,3)×combin(6,2) = 1,260. The total number of ways to pick five out of 15 players is combin(15,5) = 3,003. So, the probability the first team is split 3/2 in favor of team 1 is 1,260/3,003 = 41.96%.

If that happened, then team 1 will have six players left and team 2 four players. The number of ways you could pick three players from team 1 and two from team 2 is combin(6,3)×combin(4,2) = 120. The total number of ways to pick five out of the 10 players left is combin(10,5) = 252. So, the probability the second team is split 3/2 in favor of team 1, given that the first team is already split 3/2 that way, is 120/252 = 47.62%.

If the first two new teams are split 3/2, in favor of the former team 1, then the final team will be split 3/2 among the leftovers.

Thus, the answer to your question is 41.96% × 47.62% × 100% = 19.98%.

Formulas:

combin(x,y) = x!/(y!*(x-y)!)

x! = 1*2*3*...*x
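
The same calculation can be sketched in a few lines of Python, using the standard library's `math.comb` (Python 3.8 or later) in place of combin. The variable names are illustrative.

```python
from math import comb

# First new team: 3 of the 9 from team 1 and 2 of the 6 from team 2.
p_first = comb(9, 3) * comb(6, 2) / comb(15, 5)
# Second new team, drawn from the 6 + 4 players left.
p_second = comb(6, 3) * comb(4, 2) / comb(10, 5)
# The third team is whatever remains, so its split is forced.
p_all = p_first * p_second

print(f"{p_first:.2%} x {p_second:.2%} = {p_all:.2%}")  # 41.96% x 47.62% = 19.98%
```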

This question is raised and discussed in my forum at Wizard of Vegas.

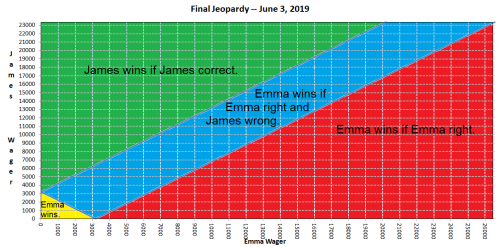

I think that James Holzhauer lost on purpose against Emma, in his last game. My evidence is that he had been wagering big every game up until then and suddenly he wagers low against Emma. I suspect the producers want Ken Jennings to host the show after Alex steps down. The show would be more dramatic if the host had the records in both shows and money won. Thus, they paid off James to throw the game.

Let me set the stage. On June 3, 2019, James was within easy reach of breaking the record for total money won in regular games, which still stands at $2,520,700. James's average win per game was much more than what he needed to break the record. So all eyes were watching on June 3 to see the record broken.

Instead, what happens is not only does James not break the record, but he loses. The winner, Emma, played a very strong strategic game as well as being good with the buzzer and simply answering correctly. She played just as James normally did. Going into Final Jeopardy the scores were:

- Emma — $26,600

- James — $23,400

- Jay — $11,000

In these situations, where second place has more than half of first place, and third place does not, it typically comes down to first and second place choosing to go high or low with their final wager. A high wager for first place is enough to lock in a win if correct. To be specific, two times the second place score less the first place score plus one dollar. That is exactly what Emma did with a wager of 2×$23,400 - $26,600 + $1 = $20,201. Most of the time, the first place player does this.

However, James didn't know what Emma would do when deciding his wager. The following table shows who would win according to what combination of wagers.

If Emma wagers at least $20,201 then she locks in a win if correct.

If Emma wagers low then she will win if either (a) James wagers low or (b) James wagers high and is wrong.

If James wagers high then he wins if (a) Emma goes high, Emma is wrong, and James is right, or (b) Emma goes low and James is right.

If James wagers low then he wins if Emma goes high and is wrong.

If perfect logicians were playing, both would randomize their decisions. However, the leader rarely goes low in these situations where he or she can be caught. If James expects Emma to go high, then he absolutely should go low. This way he doesn't have to get Final Jeopardy right to win; he just has to hope Emma blows it.

James's actual bid was the correct amount to cover Jay if Jay bet everything and was right: $23,400 - 2×$11,000 - $1 = $1,399, which qualified as a low wager for purposes of beating Emma.

If correct, James would get an extra $1,000 for coming in second, compared to third.
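
To make the wagering logic concrete, here is a minimal Python sketch that recomputes both wagers from the scores above and then checks who finishes ahead under each right/wrong combination. It ignores Jay when picking the winner, on the assumption (true for these numbers) that he cannot pass whoever leads between Emma and James. The names are illustrative.

```python
from itertools import product

scores = {"Emma": 26_600, "James": 23_400, "Jay": 11_000}

# Leader's covering bet: beat the second-place player doubling up, by $1.
emma_wager = 2 * scores["James"] - scores["Emma"] + 1
# James's bet: stay $1 ahead of Jay doubling up, even if James is wrong.
james_wager = scores["James"] - 2 * scores["Jay"] - 1


def final(score, wager, correct):
    """Score after Final Jeopardy."""
    return score + wager if correct else score - wager


winners = {}
for emma_right, james_right in product([True, False], repeat=2):
    emma = final(scores["Emma"], emma_wager, emma_right)
    james = final(scores["James"], james_wager, james_right)
    winners[(emma_right, james_right)] = "Emma" if emma > james else "James"

print(emma_wager, james_wager)  # 20201 1399
for (emma_right, james_right), name in winners.items():
    print(f"Emma right: {emma_right}, James right: {james_right} -> {name}")
```

With these wagers the result depends only on Emma: James wins exactly when she misses.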

In conclusion, I completely reject the conspiracy theory that James threw the game. He played the right way and lost due to a combination of playing a strong competitor and what most people would call "bad luck."

External Links

- Jeopardy hall of fame

- James Holzhauer on Jeopardy — Discussion in my forum at Wizard of Vegas.

In Final Jeopardy, what is the optimal strategy if the second place player has more than 2/3 the money as the first place player? Assume the third place player is not a factor.

Let me get a disclaimer out of the way first. The following analysis is based on statistical averages. An actual player should make mental adjustments for how well he knows the Final Jeopardy category as well as estimating the opponent's chances of getting it correct.

To answer your question, I first looked at four seasons of data from the Jeopardy Archive to see the four possible combinations of the first (leading) and second place (chasing) player getting Final Jeopardy right and wrong.

Final Jeopardy Scorecard

| Leading Player | Chasing Player Correct | Chasing Player Incorrect | Total |

|---|---|---|---|

| Correct | 29.0% | 25.5% | 54.5% |

| Incorrect | 17.7% | 27.8% | 45.5% |

| Total | 46.8% | 53.2% | 100.0% |

Before going on, let's define some variables:

x = Probability leading player goes high.

y = Probability chasing player goes high.

f(x,y) = Probability of the leading player winning.

Let's express f(x,y) in terms of x and y from the table above:

f(x,y) = 0.823xy + 0.545x(1-y) + 0.468(1-x)y + (1-x)(1-y)

f(x,y) = 0.810 xy - 0.455x - 0.532y + 1

To find the optimal values for x and y, let's take the derivative of f(x,y) with respect to both x and y.

∂f/∂x = -0.455 + 0.810y = 0

Thus y = 0.455/0.810 = 0.562

∂f/∂y = -0.532 + 0.810x = 0

Thus x = 0.532/0.810 = 0.657

So, the leading player should wager high with probability 65.7% and the chasing player should wager high with probability 56.2%.

Based on watching, I think the leading player wagers high more than 65.7% of the time, thus if I were in second place, I would go low.

If both players follow this randomizing strategy, the probability the leading player will win is 70.1%.
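
The last few steps can be reproduced with a short Python sketch. It takes the coefficients of the simplified f(x,y) above as given and solves for the point where both partial derivatives are zero. The function name `indifference_point` and its parameter names are hypothetical.

```python
def indifference_point(a, b, c, d):
    """For f(x, y) = a*x*y + b*x + c*y + d, find where both partials vanish."""
    y = -b / a   # from df/dx = b + a*y = 0
    x = -c / a   # from df/dy = c + a*x = 0
    value = a * x * y + b * x + c * y + d
    return x, y, value


# Coefficients of f(x,y) = 0.810xy - 0.455x - 0.532y + 1 from the text.
x, y, leader_wins = indifference_point(a=0.810, b=-0.455, c=-0.532, d=1.0)
print(f"x = {x:.3f}, y = {y:.3f}, f = {leader_wins:.3f}")  # x = 0.657, y = 0.562, f = 0.701
```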

Putting all theory aside, if you're leading, predict what the chasing player will do and do the same. If you're chasing, predict the leading player's action and do the opposite. This strategy goes for all such tournaments.

This question is raised and discussed in my forum at Wizard of Vegas.

At the beginning of a Jeopardy round, why do some players, like James Holzhauer, start picking from the bottom? Wouldn't it make more sense to warm up with the easier questions at the top, in part to ensure proper understanding of the categories, which are sometimes tricky?

The reason is the Daily Doubles are placed in the bottom three rows 91.5% of the time. The following table shows their locations on the board over 13,660 Daily Doubles found.

Daily Double Location

| Row | Column 1 | Column 2 | Column 3 | Column 4 | Column 5 | Column 6 | Total |

|---|---|---|---|---|---|---|---|

| 1 | 5 | - | 3 | 3 | 2 | 3 | 16 |

| 2 | 280 | 137 | 216 | 167 | 207 | 140 | 1,147 |

| 3 | 820 | 442 | 677 | 658 | 643 | 472 | 3,712 |

| 4 | 1,095 | 659 | 982 | 907 | 895 | 627 | 5,165 |

| 5 | 787 | 403 | 670 | 671 | 613 | 476 | 3,620 |

| Total | 2,987 | 1,641 | 2,548 | 2,406 | 2,360 | 1,718 | 13,660 |

Source: J! Archive.

Here is the same data in the form of how often a Daily Double is found in each cell of the board.

Daily Double Probability

| Row | Column 1 | Column 2 | Column 3 | Column 4 | Column 5 | Column 6 | Total |

|---|---|---|---|---|---|---|---|

| 1 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |

| 2 | 2.0% | 1.0% | 1.6% | 1.2% | 1.5% | 1.0% | 8.4% |

| 3 | 6.0% | 3.2% | 5.0% | 4.8% | 4.7% | 3.5% | 27.2% |

| 4 | 8.0% | 4.8% | 7.2% | 6.6% | 6.6% | 4.6% | 37.8% |

| 5 | 5.8% | 3.0% | 4.9% | 4.9% | 4.5% | 3.5% | 26.5% |

| Total | 21.9% | 12.0% | 18.7% | 17.6% | 17.3% | 12.6% | 100.0% |
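
The percentages above can be recomputed from the counts with a few lines of Python. This is only a sketch: it treats the "-" in row 1, column 2 as zero, which the row total of 16 supports, and the variable names are illustrative.

```python
# Daily Double counts by row (top to bottom) and column (left to right).
counts = [
    [5, 0, 3, 3, 2, 3],
    [280, 137, 216, 167, 207, 140],
    [820, 442, 677, 658, 643, 472],
    [1095, 659, 982, 907, 895, 627],
    [787, 403, 670, 671, 613, 476],
]

total = sum(map(sum, counts))
row_share = [sum(row) / total for row in counts]
col_share = [sum(col) / total for col in zip(*counts)]
bottom_three = sum(row_share[2:])

print(total)                                  # 13660
print([f"{s:.1%}" for s in row_share])
print([f"{s:.1%}" for s in col_share])
print(f"Bottom three rows: {bottom_three:.1%}")  # 91.5%
```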

The reason for searching for Daily Doubles is they are a good way to double your score. Most contestants will have a probability of about 80% to 90% of getting any given clue right. It is a great value to get even money on a wager you have an 80% to 90% chance of winning. A major reason James Holzhauer won as much as he did was aggressive Daily Double searching and then going "all in" most of the time when he found them. It is also how he lost against Emma, when she employed the same strategy against him.

What is the best strategy to play the Race Game on the Price is Right, assuming the player has no idea of the prices of the prizes?

For the benefit of readers who aren't familiar with the game, here is a video of it.

I submit that the following strategy results in a minimum average number of turns. There are many strategies that would tie it, but I don't think any can beat it.

To use the strategy, label the four price tags as 1, 2, 3, and 4. Place them on the four prizes according to the history of how many you got right in the past, starting with the first turn on the left.

Race Game Strategy

| History | Prize 1 | Prize 2 | Prize 3 | Prize 4 |

|---|---|---|---|---|

| None | 1 | 2 | 3 | 4 |

| 0 | 2 | 1 | 4 | 3 |

| 0,0 | 3 | 4 | 2 | 1 |

| 0,0,0 | 4 | 3 | 1 | 2 |

| 0,0,2 | 3 | 4 | 1 | 2 |

| 0,0,2,0 | 4 | 3 | 2 | 1 |

| 0,2 | 2 | 3 | 4 | 1 |

| 0,2,0 | 4 | 1 | 2 | 3 |

| 0,2,1 | 2 | 4 | 1 | 3 |

| 0,2,1,0 | 3 | 1 | 4 | 2 |

| 1 | 1 | 3 | 4 | 2 |

| 1,0 | 2 | 4 | 3 | 1 |

| 1,0,0 | 3 | 1 | 2 | 4 |

| 1,0,0,0 | 4 | 2 | 1 | 3 |

| 1,1 | 1 | 4 | 2 | 3 |

| 1,1,0 | 2 | 3 | 1 | 4 |

| 1,1,0,0 | 3 | 2 | 4 | 1 |

| 1,1,0,0,0 | 4 | 1 | 3 | 2 |

| 2 | 2 | 1 | 3 | 4 |

| 2,0 | 1 | 2 | 4 | 3 |

| 2,1 | 1 | 3 | 2 | 4 |

| 2,1,0 | 4 | 2 | 3 | 1 |

| 2,1,1 | 1 | 4 | 3 | 2 |

| 2,1,1,0 | 3 | 2 | 1 | 4 |

The next table shows, out of the 24 possible ways to arrange the four price tags, how many take 1 to 5 turns and the corresponding probability.

Turns Required

| Turns | Number | Probability |

|---|---|---|

| 1 | 1 | 4.17% |

| 2 | 4 | 16.67% |

| 3 | 8 | 33.33% |

| 4 | 9 | 37.50% |

| 5 | 2 | 8.33% |

| Total | 24 | 100.00% |

Taking the dot product, the average number of turns needed, under this strategy, is 3.29167.
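
For anyone who wants to verify the dot product, here is a minimal Python sketch using the counts from the table above. The list names are illustrative.

```python
turns = [1, 2, 3, 4, 5]
ways = [1, 4, 8, 9, 2]   # arrangements needing that many turns, from the table

average_turns = sum(t * w for t, w in zip(turns, ways)) / sum(ways)
print(round(average_turns, 5))  # 3.29167
```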

This question is asked and discussed in my forum at Wizard of Vegas.

I heard something about the rule of two-thirds in wagering for Final Jeopardy. Do you know about it?

Yes. It refers to a change in strategy for the second-place player if he has more than 2/3 the score of the first-place player.

Let's simplify the situation to a two-player game, as follows:

- Situation A: Second place has less than half of first place.

- Situation B: Second place has between 1/2 and 2/3 of first place.

- Situation C: Second place has more than 2/3 of first place.

Before going further, let me remind the reader of a Jeopardy rule change pertaining to ties after Final Jeopardy. No longer do both players advance, but there is now a sudden death tiebreaker question. Here is such a situation.

Situation A

Let A=$10,000 and B=$4,000

Player A should not risk losing and should bet no more than A-2B-1. If he doesn't feel confident in the category, he can bet $0. Either way, he ensures winning. In this case, A should bet between $0 and $1,999.

Player B has no hope, unless A bets too much and misses. Here, B should consider the score of third place and try to stay above him, if he can, winning $2,000 for second place, as opposed to $1,000 for third place.

Situation B

Let A=$10,000 and B=$6,000

The strategy for A is to expect B to bet everything and to bet enough to cover 2B if correct. However, to be safe, he shouldn't bet so much as to fall below B if incorrect. In this case, he should bet at least 2B-A+1 and at most A-B-1. Here, the range is $2,001 to $3,999.

The strategy for B is to bet at least enough to pass A if correct, and up to his entire score. In this case, $4,001 to $6,000.

If both players do as expected and follow this strategy, the only way player B can win is if A is wrong and B is right. The probability of this is about 19%.

Situation C

Here things get more complicated and involve more game theory and randomization.

Let A=$10,000 and B=$7,000.

Before going further, it is important to estimate the probability of the Final Jeopardy clue being answered correctly. Based on seasons 30 to 34, the first place player was correct 52% of the time and second place 46%. However, these probabilities are positively correlated. Here is a breakdown of all four possibilities:

- Both correct: 27%

- First place correct, second place incorrect: 25%

- First place incorrect, second place correct: 19%

- Both incorrect: 29%

Although the first two players average 49% correct, the probability of both being right or both being wrong is 56%.

Of course, these can change based on the category, but let's keep things simple and use the probabilities above.

In this situation, player B does not have to depend on A being wrong and B being correct. He can bet low, say $0, ensuring a win if A bets high and is wrong. In other words, if A bets enough to cover B, then A falls below B when A is wrong and B bets $0.

However, if A predicted B would bet low, say $0, then A could lock in a win by also betting $0. Both players basically have a choice to make, to go low or high. A should wish to wager the same way as B and B should wish to wager the opposite way of A. If both players were perfect logicians, they would randomize their decisions.

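In this case, a high bet by A should be at least 2B-A+1, or $4,001, enough to cover B doubling up. Unlike in situation B, that is more than A-B-1, or $2,999, so a high bet leaves A below B if A is wrong and B bets $0. A low bet by A should be $0.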

A high bet by B should be the same as for situation B: bet enough to pass A if correct. In this case, $3,001 to $7,000. A low bet by B should be $0.
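
The bet sizes quoted for situations A, B, and C all come from the same handful of formulas. The following Python sketch collects them; the function name `wager_bounds` and the dictionary keys are hypothetical, and ties are ignored for simplicity.

```python
def wager_bounds(a, b):
    """Key Final Jeopardy bet sizes for a leader with score a and a chaser with score b."""
    return {
        # Most the leader can bet and still win if wrong while the chaser
        # doubles up (negative means no such lock exists).
        "leader_lock": a - 2 * b - 1,
        # Least the leader must bet to beat a chaser who doubles up.
        "leader_cover": 2 * b - a + 1,
        # Most the leader can bet and stay ahead of a chaser who bets $0.
        "leader_cap": a - b - 1,
        # Least the chaser must bet to pass the leader's current score.
        "chaser_min": a - b + 1,
        # The chaser can bet at most everything.
        "chaser_max": b,
    }


for label, (a, b) in {"A": (10_000, 4_000), "B": (10_000, 6_000), "C": (10_000, 7_000)}.items():
    w = wager_bounds(a, b)
    can_cover_safely = w["leader_cover"] <= w["leader_cap"]
    print(label, w, "cover and stay safe:", can_cover_safely)
```

In situation C the covering bet exceeds the cap, which is exactly why the leader has to choose between going high and going low.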

Forgive me if I skip the math and get right to the randomization strategies for both players.

Player A should go high with probability 62.3% and low with probability 37.7%.

Player B should go high with probability 61.2% and low with probability 38.8%.

Assuming both players follow this randomization strategy and the probability pairings of being correct stated above, the probability of player A winning is 65.2%.

If player B had less than 2/3 of player A's score, as in situation B, player A's probability of winning would go up to 81.0%.

Both players should keep in mind the significance of the 2/3 rule when wagering on Double Jeopardy.